Abstract

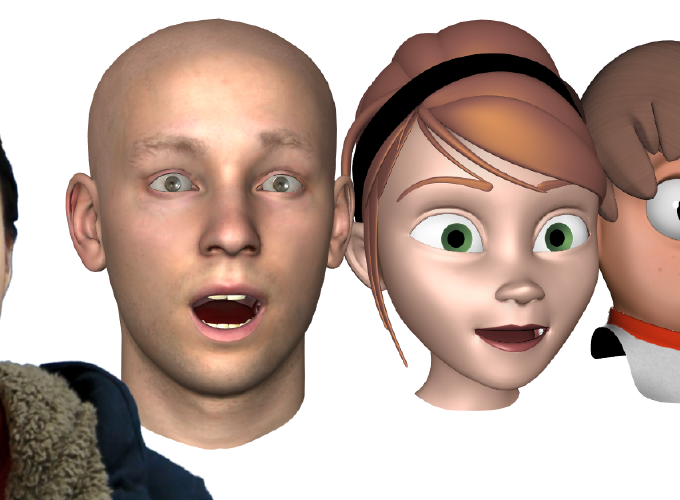

While facial capture focuses on accurately reconstructing an actor’s performance, facial animation retargeting aims to transfer that animation to another character such that its semantic meaning is preserved. Because blendshape animation is so widely used, this effectively means computing suitable blendshape weights for the given target character. Current methods either require manually created examples of matching expressions of the actor and the target character, or are limited to characters with similar facial proportions (i.e., realistic models). In contrast, our approach automatically retargets facial animations from a real actor to stylized characters. We formulate the problem of transferring the blendshapes of a facial rig to an actor as a special case of manifold alignment, exploring the similarities between the motion spaces defined by the blendshapes and by an expressive training sequence of the actor. In addition, we incorporate a simple yet elegant facial prior based on discrete differential properties to guarantee smooth mesh deformation. Our method requires only sparse correspondences between characters and is thus suitable for retargeting marker-less and marker-based motion capture as well as for animation transfer between virtual characters.